In the first article we introduced the concept of workflow as a service, which encapsulates business logic as a workflow that is then used to generate a self-describing service. This time we shift focus to an even smaller piece of business logic, a function, that is still represented as a workflow.

Workflow as a function in Automatiko comes in two flavours:

- Standalone function

- Function flow

The first one is rather straightforward: the entire workflow becomes a function. Whenever it is invoked, it runs the complete workflow, from the start event to one or more end events. Its main purpose is to let you build simple but well-defined pieces of business logic. While the function is expected to complete instantly, it can still take advantage of workflow features such as error handling and retries.

Function flow, on the other hand, is a more advanced option that breaks the workflow into functions that can be called individually. The main use case, though, is to start the flow with a single event and have the remaining functions invoked automatically based on the context of the workflow instance, that is, its data.
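To make the idea of "one invocation runs the whole workflow, with retries" concrete, here is a minimal plain-Java sketch. It does not use the Automatiko or Funqy APIs; the class `StandaloneFunctionSketch`, the method `invoke` and the `maxAttempts` parameter are hypothetical names chosen for illustration only.

```java
import java.util.function.Function;

// Hypothetical sketch: none of these names come from Automatiko or Funqy.
final class StandaloneFunctionSketch {

    /**
     * Runs the whole "workflow" in a single call and retries it when it throws.
     * The workflow is modelled as a plain function from input to output.
     */
    static <I, O> O invoke(Function<I, O> workflow, I input, int maxAttempts) {
        RuntimeException last = null;
        for (int attempt = 1; attempt <= maxAttempts; attempt++) {
            try {
                // start event, then activities, then end event, all in one call
                return workflow.apply(input);
            } catch (RuntimeException e) {
                last = e; // error handling: remember the failure and try again
            }
        }
        throw new IllegalStateException(
                "workflow failed after " + maxAttempts + " attempts", last);
    }
}
```

A caller would pass the business logic as a lambda, for example `StandaloneFunctionSketch.invoke(name -> "Hello " + name, "john", 3)`. In a real project the retry policy would presumably be declared in the workflow definition rather than hand-coded like this.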

It is based on the concept of context-altering activities: activities in the workflow definition that actually change its data. For instance, a service task or a business rule task is such an activity, while a start event, an end event or a gateway (a decision point in the workflow) is not.
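The selection of context-altering activities can be pictured as a simple filter over the nodes of a workflow. The sketch below is only an illustration of that idea under assumed names: `NodeType`, `Node` and `functionNames` are hypothetical and are not part of Automatiko.

```java
import java.util.EnumSet;
import java.util.List;
import java.util.Set;
import java.util.stream.Collectors;

// Hypothetical sketch: a toy model of a workflow definition, not Automatiko's own model.
final class ContextAlteringSketch {

    enum NodeType { START_EVENT, SERVICE_TASK, BUSINESS_RULE_TASK, GATEWAY, END_EVENT }

    record Node(String name, NodeType type) {}

    // Only these node types change the workflow data, so only they become functions.
    private static final Set<NodeType> CONTEXT_ALTERING =
            EnumSet.of(NodeType.SERVICE_TASK, NodeType.BUSINESS_RULE_TASK);

    /** Returns the names of the nodes that would each be exposed as a function. */
    static List<String> functionNames(List<Node> workflow) {
        return workflow.stream()
                .filter(node -> CONTEXT_ALTERING.contains(node.type()))
                .map(Node::name)
                .collect(Collectors.toList());
    }
}
```

For a workflow made of a start event, a service task, a gateway, a business rule task and an end event, this yields two functions: one for the service task and one for the business rule task.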

Each context-altering activity is then transformed into a function that can be invoked. Functions in the flow are always invoked through the Knative broker, which means the flow can scale almost without limit, as each invocation is an opportunity to evaluate the load and scale up if required.

Moreover, since the workflow controls the execution (aka the flow), a function can produce more than one event after it executes. A common example is a workflow instance that uses a parallel gateway to split into several paths: in that case the function emits as many events as there are parallel paths. Each of those events in turn invokes its path individually with the complete context (data). The flow also keeps track of the ids, so each invocation can be tracked and identified.
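The fan-out after a parallel gateway can be sketched as follows. This is a simplified model with hypothetical names (`Event`, `afterParallelGateway`); it only shows the shape of the idea: one event per path, each with its own event id, all sharing the workflow instance id and a full copy of the data.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.UUID;

// Hypothetical sketch: the event shape is an assumption, not Automatiko's wire format.
final class ParallelFanOutSketch {

    record Event(String id, String type, String instanceId, Map<String, Object> data) {}

    /** Emits one event per outgoing path of a parallel gateway. */
    static List<Event> afterParallelGateway(String instanceId,
                                            Map<String, Object> data,
                                            List<String> paths) {
        List<Event> events = new ArrayList<>();
        for (String path : paths) {
            events.add(new Event(
                    UUID.randomUUID().toString(), // unique id so each invocation can be tracked
                    path,                         // event type selects the function to call next
                    instanceId,                   // ties the event back to the workflow instance
                    Map.copyOf(data)));           // complete context (note: rejects null values)
        }
        return events;
    }
}
```

With two paths named `ship` and `invoice`, the function returns two events that a broker could deliver to two separate function invocations.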

While a function itself is expected to be short-lived and fast to invoke, function flows can be long-lived as well. This is possible because a workflow can persist its state between calls and be resumed by another incoming event that carries the instance identifier.

As opposed to workflow as a service, a function or function flow is not self-describing in the same manner, because it relies on different technology. Functions and function flows in Automatiko are implemented with the Quarkus Funqy framework.

Workflow as a function uses the HTTP binding of Funqy, while function flow uses the Knative binding with CloudEvents.

This opens the door to a wide range of cloud providers, as functions can be deployed to:
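As a rough illustration of "persist state, then resume by identifier", the sketch below keeps suspended instances in an in-memory map. Everything here is an assumption made for the example: the class `ResumableFlowSketch`, its methods, and the use of a `HashMap` in place of a real persistence store.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Optional;

// Hypothetical sketch: an in-memory stand-in for a persistent workflow store.
final class ResumableFlowSketch {

    private final Map<String, Map<String, Object>> store = new HashMap<>();

    /** Persists the state of an instance that is waiting for a later event. */
    void suspend(String instanceId, Map<String, Object> state) {
        store.put(instanceId, new HashMap<>(state));
    }

    /**
     * Resumes the instance named by the incoming event and merges the event data
     * into its state. Returns empty when no such instance is waiting.
     */
    Optional<Map<String, Object>> resume(String instanceId, Map<String, Object> eventData) {
        Map<String, Object> state = store.get(instanceId);
        if (state == null) {
            return Optional.empty();
        }
        state.putAll(eventData); // event data overrides older values with the same key
        return Optional.of(new HashMap<>(state));
    }
}
```

The important part is the lookup key: the incoming event only needs to carry the instance identifier for the flow to continue where it stopped, no matter how much time has passed between the calls.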

- AWS Lambda

- Azure Functions

- Google Cloud Functions

- Or as a standard HTTP endpoint

Function flow can be deployed to any cloud offering that supports Knative, such as:

- Knative on a Kubernetes cluster

- Google Cloud Run

- OpenShift Serverless

Function flow builds on top of CloudEvents as the exchange format, which makes invocations easily portable between deployment environments. Events can be bound to various protocols such as HTTP, Apache Kafka, Apache Camel and more, without requiring any change in your business logic.

Even though functions and function flows are not self-describing like services, they do come with helpful instructions on what the function endpoints look like and what event payloads they expect. These are provided either as build-time instructions or through the Quarkus Dev UI add-on.
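To show why the exchange format is independent of the business logic, here is a small sketch that maps an event onto HTTP headers in the binary content mode of the CloudEvents HTTP binding, where the required attributes travel as `ce-` prefixed headers. The `CloudEvent` record and the `toHttpHeaders` method are hypothetical helpers written for this example, and the sketch assumes a JSON payload.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical sketch: a hand-rolled mapping, not the CloudEvents SDK or Funqy.
final class CloudEventHeadersSketch {

    // The required CloudEvents context attributes, plus a JSON payload.
    record CloudEvent(String id, String source, String type, String jsonData) {}

    /** Maps the event attributes to HTTP headers (binary content mode). */
    static Map<String, String> toHttpHeaders(CloudEvent event) {
        Map<String, String> headers = new LinkedHashMap<>();
        headers.put("ce-specversion", "1.0");
        headers.put("ce-id", event.id());
        headers.put("ce-source", event.source());
        headers.put("ce-type", event.type());
        headers.put("content-type", "application/json"); // assumed: the payload is JSON
        return headers;
    }
}
```

The payload itself (`jsonData`) is sent unchanged as the request body. Binding the same event to another protocol would only change this mapping layer, while the function that consumes the data stays the same.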

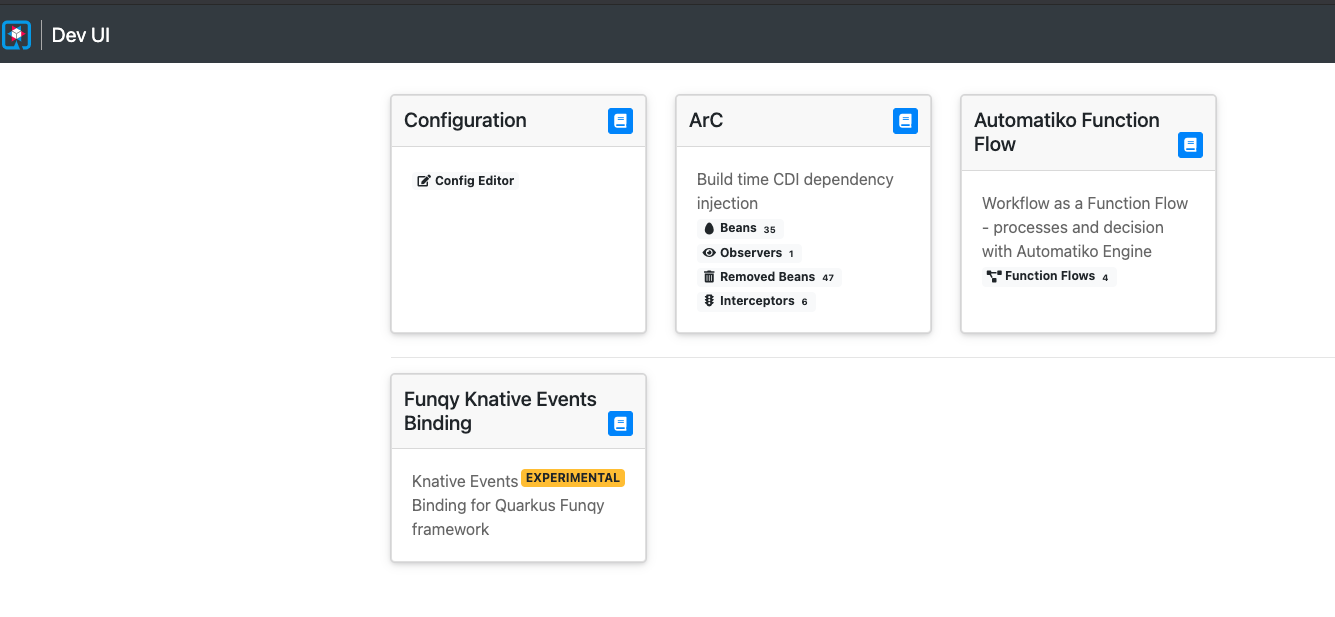

Automatiko integrated with Quarkus Dev UI.

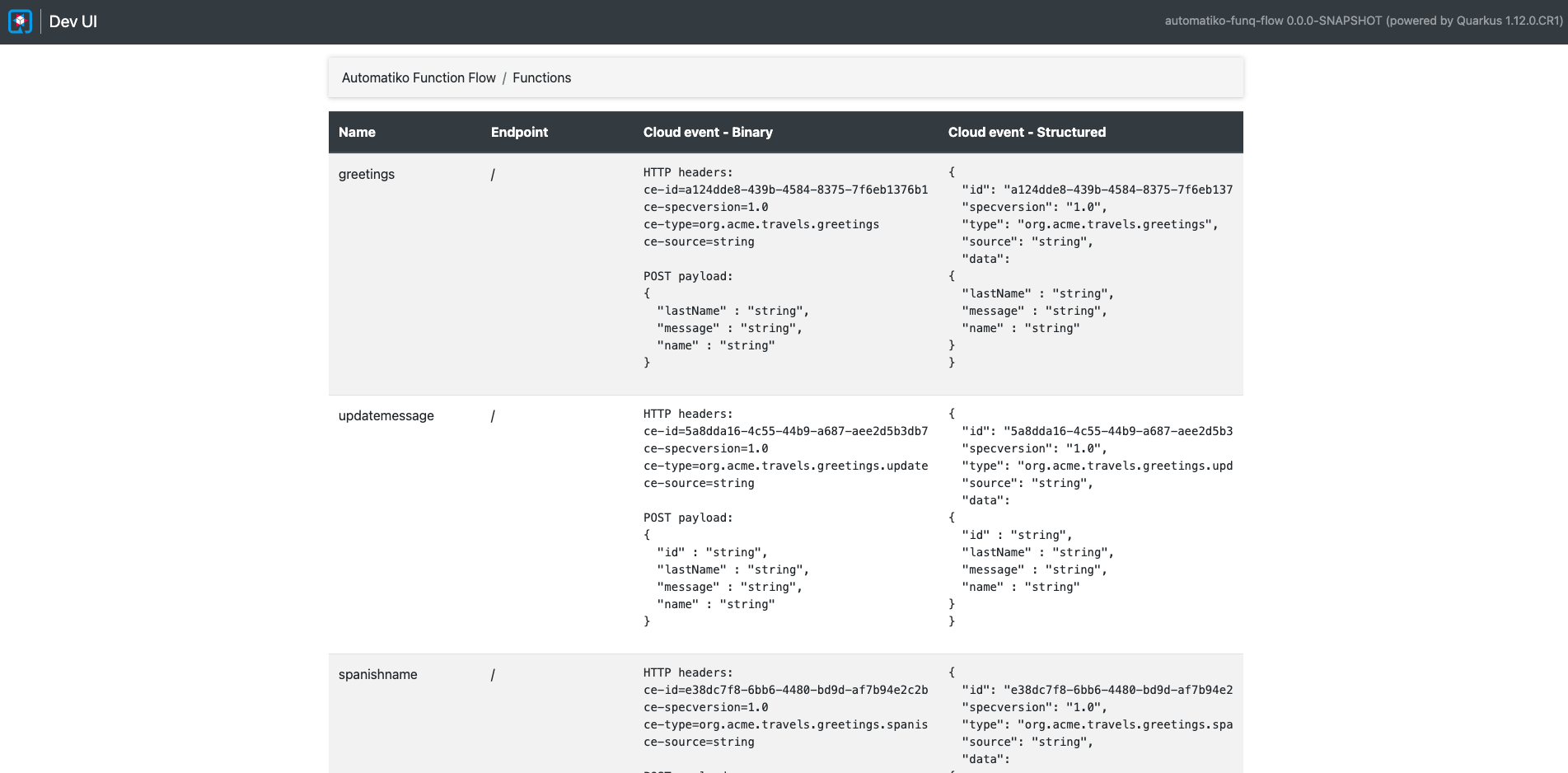

The Quarkus Dev UI of Automatiko helps with function flow endpoints.

Limitations

Functions and function flows are an excellent choice for certain use cases, but certainly not for all. There are limitations on the types of workflow constructs you can use, and long-running workflow instances are usually not the best fit. But if you need unlimited scalability and the option to break the workflow into small pieces without having to synchronise on state, then functions and function flows will do the job.

Conclusion

Automatiko provides various options for using workflows depending on your use case. A workflow can be a short-lived, instant execution, but it can also be a year-long instance that requires time-based interaction, involvement of human actors and more. So it is best to look at the problem from a requirements point of view and choose the most suitable option on that basis. Feel free to drop a question if you are unsure; we are more than happy to assist you.

Photographs by Unsplash.