We are pleased to announce a new release of Automatiko - 0.7.0

Highlights

- GraphQL service interface

- Multiple error codes on a single error event

- Google Cloud Run integration simplified

- Sending emails correlated with workflow instances

- Receiving emails and correlating them with workflow instances

- Use of functions inside data assignments

- Introducing Automatiko test support module

GraphQL service interface

Automatiko builds a REST service interface for every workflow definition. This is the main entry point to the service and exposes all operations defined in the workflow. With the 0.7.0 release, users can also expose the service as a GraphQL interface (in addition to the REST API).

A dedicated blog post showing the GraphQL interface of the service in detail will be published soon.

It comes with all the same features as the REST API and additionally provides subscriptions for the most common operations:

- workflow instances created

- workflow instances completed

- workflow instances aborted

- workflow instances in error

The GraphQL interface is available at the /graphql endpoint.

The GraphQL interface exposes queries, mutations and subscriptions for each workflow definition found in the service.

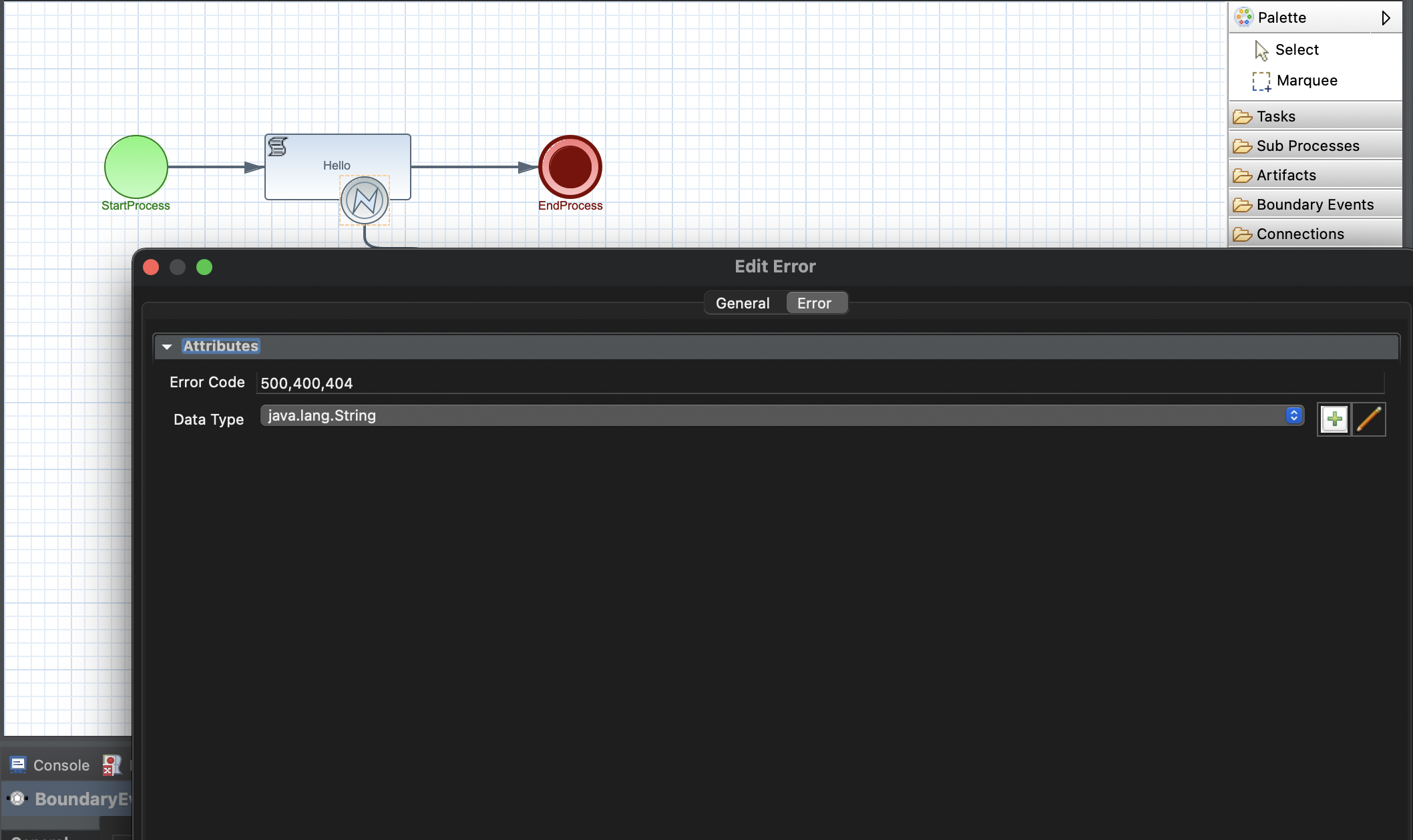
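GraphQL over HTTP is usually consumed by POSTing a JSON envelope of the form {"query": "..."} to the endpoint. The sketch below shows that envelope with the JDK's java.net.http API. It assumes the service runs at http://localhost:8080, and the query field orders { id } is hypothetical: the real field names depend on the schema generated for your workflow definitions.

```java
import java.net.URI;
import java.net.http.HttpRequest;

public class GraphQLRequestSketch {

    // Assumed local endpoint; adjust host and port to your deployment.
    static final URI ENDPOINT = URI.create("http://localhost:8080/graphql");

    // Wraps a GraphQL document in the {"query": "..."} JSON envelope.
    // Minimal escaping only: backslashes, double quotes and newlines.
    public static String toJsonBody(String query) {
        String escaped = query
                .replace("\\", "\\\\")
                .replace("\"", "\\\"")
                .replace("\n", "\\n");
        return "{\"query\": \"" + escaped + "\"}";
    }

    // Builds (but does not send) a POST request carrying the query.
    public static HttpRequest buildRequest(String query) {
        return HttpRequest.newBuilder(ENDPOINT)
                .header("Content-Type", "application/json")
                .POST(HttpRequest.BodyPublishers.ofString(toJsonBody(query)))
                .build();
    }

    public static void main(String[] args) {
        // Hypothetical query; "orders" stands in for a generated workflow query field.
        String query = "query { orders { id } }";
        HttpRequest request = buildRequest(query);
        System.out.println(request.method() + " " + request.uri());
        System.out.println(toJsonBody(query));
        // To send it: HttpClient.newHttpClient().send(request, HttpResponse.BodyHandlers.ofString())
    }
}
```

Subscriptions typically run over a WebSocket rather than a plain POST, so they need a GraphQL client that supports that transport.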

Multiple error codes on a single error event

Sometimes, when dealing with error handling, a given service can return multiple error codes that the workflow logic should treat in the same way. Until now, users had to define a separate error for each error type identified by an error code. With this release, it is as simple as specifying the error codes to handle as a comma-separated list (as illustrated in the picture below).

Multiple error codes on single error.
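To make the matching rule concrete, here is a minimal sketch of how a comma-separated list of error codes could be interpreted. It assumes that the handler fires when the raised code equals any entry in the list, with surrounding whitespace ignored; ErrorCodeMatcher is a hypothetical class for illustration, not part of Automatiko's API.

```java
import java.util.Arrays;
import java.util.Set;
import java.util.stream.Collectors;

// Hypothetical illustration of "one error event, several error codes".
public class ErrorCodeMatcher {

    private final Set<String> codes;

    // Parses a list such as "404, 409,500", trimming whitespace and skipping empty entries.
    public ErrorCodeMatcher(String commaSeparatedCodes) {
        this.codes = Arrays.stream(commaSeparatedCodes.split(","))
                .map(String::trim)
                .filter(code -> !code.isEmpty())
                .collect(Collectors.toSet());
    }

    // True if the raised error code is one of the configured codes.
    public boolean handles(String errorCode) {
        return errorCode != null && codes.contains(errorCode.trim());
    }

    public static void main(String[] args) {
        ErrorCodeMatcher handler = new ErrorCodeMatcher("404, 409,500");
        System.out.println(handler.handles("409")); // true
        System.out.println(handler.handles("401")); // false
    }
}
```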


Google Cloud Run integration simplified

Using workflows as function flows together with Google Cloud Run was introduced in the 0.6.0 release. At that time, many additional steps were needed to configure and deploy it. With this release, this has been significantly simplified and requires only two things:

- add a dependency on the pubsub sink

- configure the deployment target and the Google project id in application.properties

The gcloud commands needed to deploy it are also generated and can be found in the target/scripts folder. Both deploy and undeploy scripts are provided.

Sending emails correlated with workflow instances

The recently introduced SendEmailService add-on service has been improved to send emails that are correlated with workflow instance data. In most cases where a workflow sends emails, there is a need to keep track of where the email came from and, if a reply arrives, to have it consumed by the same workflow instance. With correlated emails this is easy to achieve.

There are several new methods in the SendEmailService class that allow passing correlation information to be included in the outgoing email. This in turn makes it easy to receive emails and associate them with the workflow instance based on that correlation information.
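The idea behind a correlated email can be sketched without any mail library: the outgoing message carries a marker derived from the workflow instance, so a reply still contains it. The sketch below assumes a hypothetical "[ref:<id>]" tag appended to the subject line; it is not how SendEmailService encodes correlation (see the Automatiko documentation for its actual methods), and CorrelatedSubject is a made-up name.

```java
import java.util.Objects;

// Hypothetical illustration: embed a workflow instance id in an email subject.
public class CorrelatedSubject {

    // Hypothetical marker format; Automatiko's real encoding may differ.
    static final String PREFIX = "[ref:";
    static final String SUFFIX = "]";

    // Appends the instance id so that a reply ("Re: <subject>") still carries it.
    public static String tagSubject(String subject, String instanceId) {
        Objects.requireNonNull(instanceId, "instanceId");
        return subject + " " + PREFIX + instanceId + SUFFIX;
    }

    public static void main(String[] args) {
        // prints: Please approve order 42 [ref:a1b2-c3d4]
        System.out.println(tagSubject("Please approve order 42", "a1b2-c3d4"));
    }
}
```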

Receiving emails and correlating them with workflow instances

As a new add-on service in a similar area to the send email service, a receive email service has been added in this release.

This service mainly focuses on connectivity for receiving emails as part of a workflow definition, where they are expressed as message events. In addition, there are ready-to-use InputConverters that are responsible for mapping the email body into data objects used within the workflow definition. Here is a list of these input converters:

- io.automatiko.engine.addons.services.receiveemail.EmailInputConverter: a basic email extraction converter that returns io.automatiko.engine.addons.services.receiveemail.EmailMessage

- io.automatiko.engine.addons.services.receiveemail.EmailAttachmentInputConverter: single attachment extraction that returns io.automatiko.engine.api.workflow.files.File

- io.automatiko.engine.addons.services.receiveemail.EmailAttachmentsInputConverter: extraction of all attachments that returns a List of attachments

Last but not least, the receive email add-on service also provides functions that can be used in the correlation expression of a message event to easily correlate an incoming email with the workflow instance that is waiting for it. Have a look at the Automatiko documentation for more information about the receive email add-on service.
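On the receiving side, a correlation function only has to pull the correlation key back out of the incoming message. The sketch below assumes a hypothetical "[ref:<id>]" marker in the subject line; the receive email add-on ships its own correlation functions, so EmailCorrelation and correlationKey are illustrative names only, not its API.

```java
import java.util.Optional;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical correlation helper: extract an instance id from an email subject.
public class EmailCorrelation {

    // Matches a hypothetical "[ref:<id>]" marker anywhere in the subject.
    private static final Pattern REF = Pattern.compile("\\[ref:([^\\]]+)\\]");

    // Returns the correlation key found in the subject, if any.
    public static Optional<String> correlationKey(String subject) {
        if (subject == null) {
            return Optional.empty();
        }
        Matcher m = REF.matcher(subject);
        return m.find() ? Optional.of(m.group(1)) : Optional.empty();
    }

    public static void main(String[] args) {
        System.out.println(correlationKey("Re: Please approve order 42 [ref:a1b2-c3d4]").orElse("none")); // a1b2-c3d4
        System.out.println(correlationKey("Unrelated message").orElse("none"));                          // none
    }
}
```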

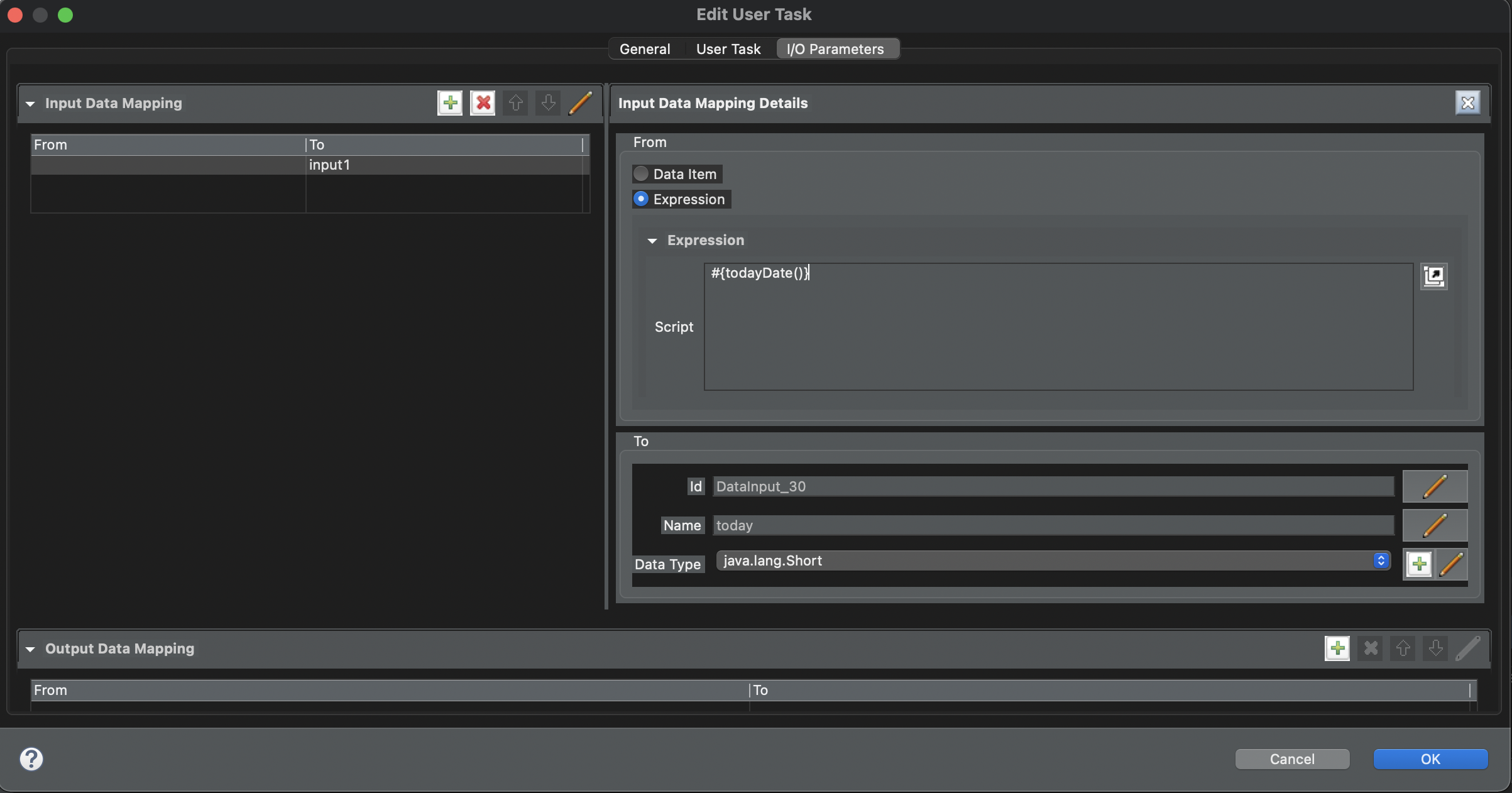

Use of functions inside data assignments

In many cases users need to place some kind of script or expression within the workflow definition, for example to express gateway (decision point) conditions that look at various workflow data items.

To make this more maintainable, Automatiko introduced the concept of functions. Functions are essentially public static methods that encapsulate the logic behind the function and can be referenced inside the workflow just by method name. Users can provide their own custom functions simply by implementing the io.automatiko.engine.api.Functions marker interface. All public static methods of such classes are automatically available during workflow execution.
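A custom functions class is then just a holder of public static methods. So that the sketch below compiles on its own, it declares a local stand-in Functions marker interface; in a real project you would implement io.automatiko.engine.api.Functions instead. OrderFunctions, isHighValue and fullName are hypothetical examples.

```java
// Hypothetical custom functions: every public static method is meant to be
// callable by name from the workflow.
public class OrderFunctions implements Functions {

    // Example condition helper, e.g. for a gateway.
    public static boolean isHighValue(double total) {
        return total > 1000.0;
    }

    // Example value helper, e.g. for building a data item.
    public static String fullName(String first, String last) {
        return (first + " " + last).trim();
    }

    public static void main(String[] args) {
        System.out.println(isHighValue(1500.0));     // true
        System.out.println(fullName("Jane", "Doe")); // Jane Doe
    }
}

// Stand-in for io.automatiko.engine.api.Functions so this file compiles alone.
interface Functions {
}
```

Inside the workflow, such a method would be referenced by name, for example isHighValue(order.total) in a gateway condition, where order.total is a hypothetical data item.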

With the 0.7.0 release, these functions can also be used within data mappings, which significantly reduces boilerplate code inside the workflow definition. A function is placed into a data mapping as an expression wrapped with #{function}, as illustrated in the following picture.

Use of functions as expression in data mapping.


Introducing Automatiko test support module

Testability of code (regardless of whether it is hand written or generated) is essential. From the very beginning, Automatiko has supported end-to-end tests of services built from workflow definitions. In some cases, though, this was quite difficult or could introduce instability, especially when dealing with so-called jobs. Jobs are in many cases background-triggered tasks, for instance timers, which makes testing them tricky. Moreover, if a timer in the service is scheduled to fire once a week, it is difficult to test it repeatedly without waiting a week.

To address this problem, Automatiko 0.7.0 introduces a new module completely dedicated to testing: io.automatiko.quarkus:automatiko-test-support. Its main part is an alternative implementation of JobsService that is suited for testing, as it provides an easy way to get hold of the currently scheduled jobs and to trigger them.
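The principle behind such a test-oriented jobs service can be shown in plain Java: instead of firing jobs on a clock, it only records them, and the test decides when each one runs. ManualJobService below is a hypothetical, heavily simplified stand-in written for illustration; it is not the implementation shipped in automatiko-test-support.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.Set;

// Hypothetical test double: records scheduled jobs instead of running them on a timer.
public class ManualJobService {

    private final Map<String, Runnable> jobs = new LinkedHashMap<>();

    public void schedule(String jobId, Runnable action) {
        jobs.put(jobId, action);
    }

    // Snapshot of the ids of currently scheduled jobs.
    public Set<String> jobIds() {
        return Set.copyOf(jobs.keySet());
    }

    // Runs the job immediately and removes it, as a one-shot timer would.
    public void trigger(String jobId) {
        Runnable action = jobs.remove(jobId);
        if (action == null) {
            throw new IllegalArgumentException("No job scheduled with id " + jobId);
        }
        action.run();
    }

    public static void main(String[] args) {
        ManualJobService service = new ManualJobService();
        StringBuilder log = new StringBuilder();
        service.schedule("weekly-report", () -> log.append("report sent"));

        System.out.println(service.jobIds()); // [weekly-report]
        service.trigger("weekly-report");      // no need to wait a week
        System.out.println(log);               // report sent
        System.out.println(service.jobIds()); // []
    }
}
```

Because the test controls when each job runs, a weekly timer can be exercised in milliseconds and the outcome no longer depends on wall-clock timing.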

In addition, it comes with a dedicated QuarkusTestProfile implementation that configures it automatically.

The snippet below shows how to make use of it (first make sure to add the automatiko-test-support module to your project as a dependency):

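```java
import static org.junit.jupiter.api.Assertions.assertEquals;

import java.util.Set;
import javax.inject.Inject;
import org.junit.jupiter.api.Test;
import io.quarkus.test.junit.QuarkusTest;
import io.quarkus.test.junit.TestProfile;
// AutomatikoTestProfile and TestJobService come from automatiko-test-support

@QuarkusTest
@TestProfile(AutomatikoTestProfile.class)
public class VerificationTest {

    @Inject
    TestJobService jobService;

    ...

    @Test
    public void testTimerWorkflow() throws Exception {
        Set<String> jobs = jobService.jobIds();
        assertEquals(1, jobs.size());
        // fire the scheduled timer right away instead of waiting for it
        jobService.triggerProcessJob(jobs.iterator().next());
        ...
    }
}
```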

Photographs by Unsplash.