Workflow as a Function Flow powered by a fully managed service - Google Cloud Run

Introduction

This is a follow-up blog post about Workflow as a Function Flow on Google Cloud Run. It focuses on the 0.7.0 release, which brings significant improvements in this area.

Workflow as a Function Flow is a powerful concept that lets you build business logic as a complete, end-to-end use case while taking advantage of Knative-based serverless platforms to provide a highly scalable and resilient infrastructure.

By default, Workflow as a Function Flow communicates directly with the Knative Broker to publish events and thereby trigger function invocations. This is not possible in a managed service such as Google Cloud Run, so Google PubSub is used instead to run with the same characteristics. This requires some additional configuration steps, because relying on the Cloud Event type attribute alone as the trigger is not enough.

In addition to the type attribute, which is the main trigger, the source attribute of the Cloud Event is also used. Luckily, Cloud Run with PubSub puts the topic information (where the message came from) into the source attribute of the Cloud Event it delivers. Automatiko in turn uses this to look up the proper function to invoke based on the Cloud Event attributes (type and source). Because of that, the workflow and the nodes that compose its functions must be additionally annotated to provide the topic information as a source attribute to filter on. All the details can be found in the Configuration section of this article.
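To make the role of the source attribute more concrete, the sketch below recovers a topic name from it. It assumes the source format used for PubSub-backed Eventarc triggers (//pubsub.googleapis.com/projects/PROJECT/topics/TOPIC); the variable names and the my-project id are illustrative, and this is not Automatiko's actual lookup code.

```shell
#!/bin/sh
# Assumed attribute values of a Cloud Event delivered for a PubSub message;
# "my-project" is a placeholder project id.
ce_type="google.cloud.pubsub.topic.v1.messagePublished"
ce_source="//pubsub.googleapis.com/projects/my-project/topics/io.automatiko.examples.userRegistration.registeruser"

# Strip everything up to and including "/topics/" to get the topic name.
topic="${ce_source##*/topics/}"

echo "type=$ce_type"
echo "topic=$topic"
```

Note that the type is the same for every PubSub message delivered this way, which is why the type alone cannot identify the function to invoke.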

A complete example can be found in this repository.

Get started with Google Cloud Run

Have a look at the Google Cloud Run documentation to get started with the offering. Once you have an environment ready to use, you can try it out with an Automatiko service running as a Function Flow.

Configuration

Dependencies

Configuring the project is rather simple. Once you create a project based on automatiko-function-flow-archetype, only one additional dependency is needed:

<dependency>
  <groupId>io.automatiko.extras</groupId>
  <artifactId>automatiko-gcp-pubsub-sink</artifactId>
</dependency>

This dependency brings in the Google PubSub event sink, which publishes events for each function invocation during instance execution. All data flows through PubSub topics, so they must be created in advance.

Project configuration

The project configuration, which resides in src/main/resources/application.properties, must define the following properties:

quarkus.automatiko.target-deployment=gcp-pubsub

quarkus.google.cloud.project-id=YOUR_PROJECT_ID

YOUR_PROJECT_ID must be replaced with the actual id of the project that this service will be deployed to.

Build and Deployment

Once the service is configured and the Google Cloud Platform account is set up (topics included), it's time to build and deploy it. Building it is as easy as running a single command.

mvn clean package -Pcontainer-native

NOTE: This command requires Docker to be installed, as it builds a Docker container with a native image in it.

Once the service is built as a Docker image, you can push it to a container registry. As an example, the following two commands push it to the Google Cloud Platform container registry.

docker tag IMAGE gcr.io/GOOGLE_PROJECT_ID/automatiko/user-registration-gcp-cloudrun:latest

docker push gcr.io/GOOGLE_PROJECT_ID/automatiko/user-registration-gcp-cloudrun:latest

The following must be replaced with actual values matching your service and Google project:

- IMAGE - image id of the built container image

- GOOGLE_PROJECT_ID - id of your Google Cloud Platform project

- automatiko/user-registration-gcp-cloudrun - container image name

- latest - container image tag

The gcloud scripts are located inside the target/scripts folder, where you can find both deploy and undeploy scripts.

A sample deploy script is illustrated below.

gcloud pubsub topics create io.automatiko.examples.userRegistration.registrationfailed --project=CHANGE_ME

gcloud pubsub topics create io.automatiko.examples.userRegistration.notifyservererror --project=CHANGE_ME

gcloud pubsub topics create io.automatiko.examples.userRegistration.userregistered --project=CHANGE_ME

gcloud pubsub topics create io.automatiko.examples.userRegistration.notifyregistered --project=CHANGE_ME

gcloud pubsub topics create io.automatiko.examples.userRegistration.registeruser --project=CHANGE_ME

gcloud pubsub topics create io.automatiko.examples.userRegistration.invaliddata --project=CHANGE_ME

gcloud pubsub topics create io.automatiko.examples.userRegistration.generateusernameandpassword --project=CHANGE_ME

gcloud pubsub topics create io.automatiko.examples.userRegistration.alreadyregistered --project=CHANGE_ME

gcloud pubsub topics create io.automatiko.examples.userRegistration.getuser --project=CHANGE_ME

gcloud pubsub topics create io.automatiko.examples.userRegistration --project=CHANGE_ME

gcloud eventarc triggers create notifyservererror --event-filters="type=google.cloud.pubsub.topic.v1.messagePublished" --destination-run-service=user-registration-gcp-cloudrun --destination-run-path=/ --transport-topic=io.automatiko.examples.userRegistration.notifyservererror --location=us-central1

gcloud eventarc triggers create notifyregistered --event-filters="type=google.cloud.pubsub.topic.v1.messagePublished" --destination-run-service=user-registration-gcp-cloudrun --destination-run-path=/ --transport-topic=io.automatiko.examples.userRegistration.notifyregistered --location=us-central1

gcloud eventarc triggers create registeruser --event-filters="type=google.cloud.pubsub.topic.v1.messagePublished" --destination-run-service=user-registration-gcp-cloudrun --destination-run-path=/ --transport-topic=io.automatiko.examples.userRegistration.registeruser --location=us-central1

gcloud eventarc triggers create generateusernameandpassword --event-filters="type=google.cloud.pubsub.topic.v1.messagePublished" --destination-run-service=user-registration-gcp-cloudrun --destination-run-path=/ --transport-topic=io.automatiko.examples.userRegistration.generateusernameandpassword --location=us-central1

gcloud eventarc triggers create getuser --event-filters="type=google.cloud.pubsub.topic.v1.messagePublished" --destination-run-service=user-registration-gcp-cloudrun --destination-run-path=/ --transport-topic=io.automatiko.examples.userRegistration.getuser --location=us-central1

gcloud eventarc triggers create userregistration --event-filters="type=google.cloud.pubsub.topic.v1.messagePublished" --destination-run-service=user-registration-gcp-cloudrun --destination-run-path=/ --transport-topic=io.automatiko.examples.userRegistration --location=us-central1

gcloud run deploy user-registration-gcp-cloudrun --platform=managed --image=gcr.io/CHANGE_ME/automatiko/user-registration-gcp-cloudrun:0.0.0-SNAPSHOT --region=us-central1

These scripts are a complete set of commands to successfully deploy a Workflow as a Function Flow service to Google Cloud Run.
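Only the deploy script is shown here. As a rough idea of what tearing down the same resources could involve, the following sketch deletes the triggers, topics, and service in turn. It is an assumption derived from the sample deploy script, not the generated undeploy script itself; the run helper, the undeploy function, and the DRY_RUN variable are hypothetical and only make the sketch safe to try.

```shell
#!/bin/sh
# Sketch only: mirrors the sample deploy script; the generated undeploy
# script in target/scripts may differ.
PROJECT_ID="CHANGE_ME"
REGION="us-central1"
SERVICE="user-registration-gcp-cloudrun"
BASE="io.automatiko.examples.userRegistration"

# Hypothetical helper: prints the command unless DRY_RUN=0 is set.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "$@"
  else
    "$@"
  fi
}

undeploy() {
  # Delete the Eventarc triggers created by the deploy script.
  for trigger in notifyservererror notifyregistered registeruser \
      generateusernameandpassword getuser userregistration; do
    run gcloud eventarc triggers delete "$trigger" --location="$REGION" --quiet
  done

  # Delete the per-node topics, then the workflow-level topic.
  for node in registrationfailed notifyservererror userregistered \
      notifyregistered registeruser invaliddata \
      generateusernameandpassword alreadyregistered getuser; do
    run gcloud pubsub topics delete "$BASE.$node" --project="$PROJECT_ID" --quiet
  done
  run gcloud pubsub topics delete "$BASE" --project="$PROJECT_ID" --quiet

  # Finally remove the Cloud Run service itself.
  run gcloud run services delete "$SERVICE" --platform=managed --region="$REGION" --quiet
}

undeploy
```

By default the commands are only printed; running the script with DRY_RUN=0 executes them against the project configured in PROJECT_ID.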

Execution

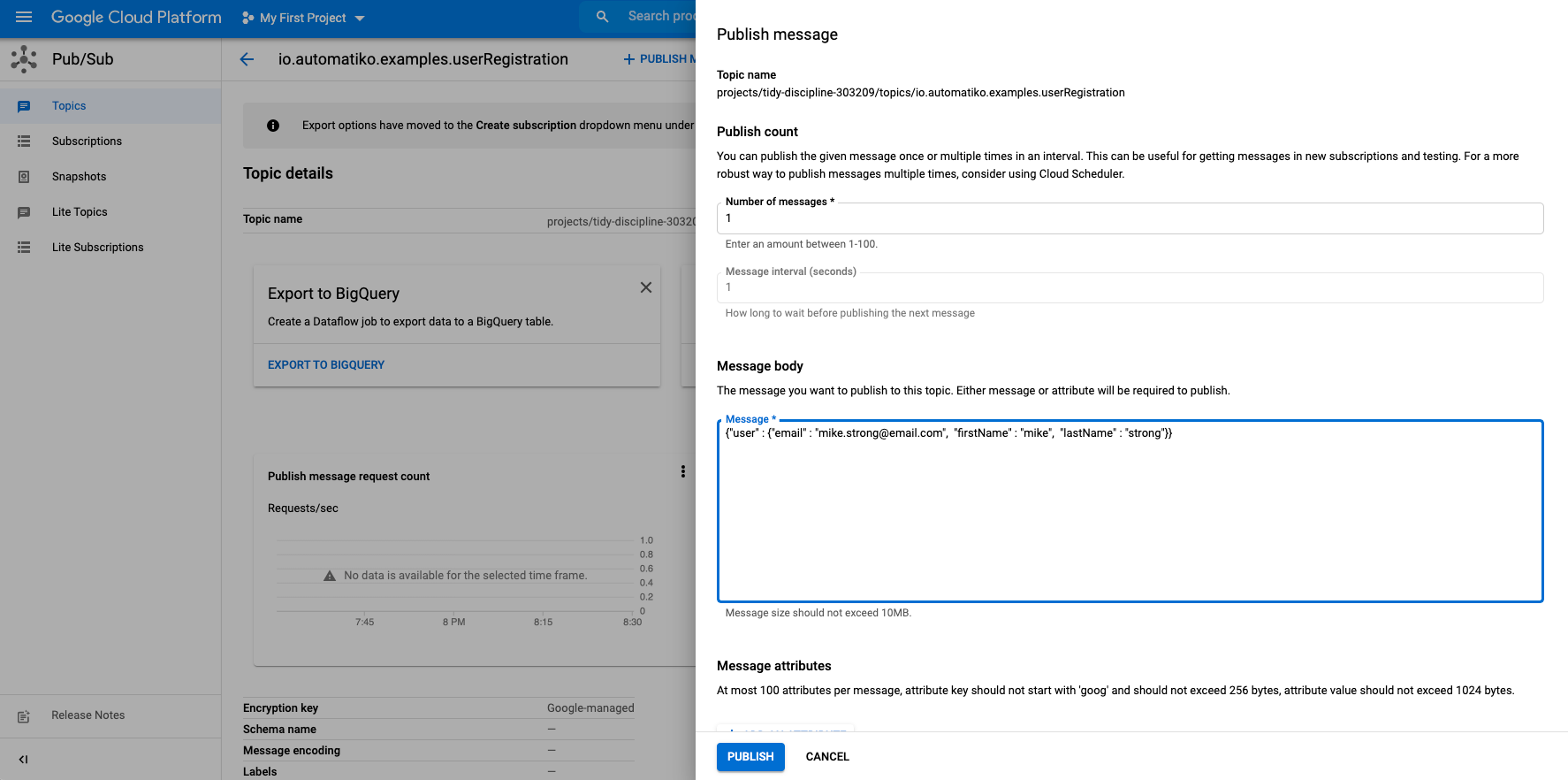

To start a workflow execution (which will run as a function flow), publish a new message to the topic that is linked with the workflow.

Publish message to start workflow execution.
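From the command line, publishing could look like the sketch below, which uses gcloud pubsub topics publish against the workflow-level topic from the sample deploy script (assumed here to be the topic linked with the workflow). The JSON payload is purely hypothetical and must be adapted to the data model of your workflow; the run helper, the publish_start_message function, and the DRY_RUN variable are illustrative too.

```shell
#!/bin/sh
# Sketch only: topic and project come from the sample deploy script;
# the message body is a made-up example payload.
PROJECT_ID="CHANGE_ME"
TOPIC="io.automatiko.examples.userRegistration"
MESSAGE='{"user":{"email":"john@example.com","firstName":"John","lastName":"Doe"}}'

# Hypothetical helper: prints the command unless DRY_RUN=0 is set.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "$@"
  else
    "$@"
  fi
}

publish_start_message() {
  # Publish a single message to the topic that starts the workflow.
  run gcloud pubsub topics publish "$TOPIC" --project="$PROJECT_ID" --message="$MESSAGE"
}

publish_start_message
```

By default the command is only printed; setting DRY_RUN=0 actually publishes the message.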

Conclusion

This article showed how to use Automatiko and its Workflow as a Function Flow on Google Cloud Run as a managed Knative environment. To work around the lack of direct access to a Knative Broker, it uses Google PubSub to exchange events, providing an efficient and highly scalable infrastructure.

This is just the beginning, and a number of improvements are in the works, such as automatic generation of scripts to create topics and deploy the service with triggers. So stay tuned and let us know (via Twitter or the mailing list) what you would like to see more of in this area.

Photographs by Unsplash.